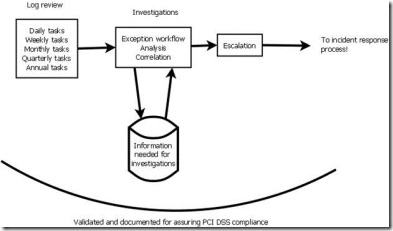

Once upon a time, I was retained to create comprehensive PCI DSS-focused log review policies, procedures and practices for a large company. As I am preparing to handle more such engagements (including ones not focused on PCI DSS, but covering other compliance or purely security log reviews), I decided to publish a heavily sanitized version of that log review guidance as a long blog post series, tagged “PCI_Log_Review.” It is focused on PCI DSS, but based on generally useful log review practices that can be used by anybody, with any regulation or without any compliance flavor at all.

This is the eighth post in the long, long series (part 1, part 2, part 3 – all parts). A few tips on how you can use it in your organization can be found in Part 1. You can also retain me to customize or adapt it to your needs.

And so we continue with our Complete PCI DSS Log Review Procedures:

A baseline has to be built without a log management tool when the logs are not compatible with an available tool, or when the available tool understands log data poorly (for example, a text indexing tool). To do it, perform the following:

1. Make sure that relevant logs from a PCI application are saved in one location

2. Select a time period for an initial baseline: “90 days” or “all time” if logs have been collected for less than 90 days; check the timestamp on the earliest logs to determine that

3. Review log entries starting from the oldest to the newest, attempting to identify their types

4. Manually create a summary of all observed types; if realistic, collect the counts of times each message was seen (not likely in case of high log data volume)

5. Assuming that no breaches of card data have been discovered in that time period, we can accept the above report as a baseline for “routine operation”

6. An additional step should be performed while creating a baseline: even though we assume that no compromise of card data has taken place, there is a chance that some of the log messages recorded over the 90 day period triggered some kind of action or remediation. Such messages are referred to as “known bad” and should be marked as such.

Here is an example of the above process, performed on a Windows system in scope for PCI DSS that also hosts a PCI DSS application called “SecureFAIL.”

1. Make sure that relevant logs from a PCI application are saved in one location

First, verify Windows event logging is running:

a. Go to “Control Panel”, click on “Administrative Tools”, click on “Event Viewer”

b. Right-click on “Security Log”, select “Properties.” The result should match this:

c. Next, review audit policy

Second, verify SecureFAIL dedicated logging:

a. Go to “C:\Program Files\SecureFAIL\Logs”

b. Review the contents of the directory; it should show the following:

2. Select a time period for an initial baseline: “90 days” or “all time” if logs have been collected for less than 90 days; check the timestamp on the earliest logs to determine that

a. Windows event logs: available for 30 days on the system, might be available for longer

b. SecureFAIL logs: available for 90 days on the system, might be available for longer

Baselining will be performed over the last 30 days, since Windows event log data is available for 30 days only.
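The choice of baseline period can also be expressed as a small calculation. The following is a minimal Python sketch, not part of the original procedure: the function name `baseline_window`, the source names and the dates are all hypothetical, chosen only to mirror the 30-day and 90-day availability described above.

```python
from datetime import datetime, timedelta

def baseline_window(earliest_by_source, now, max_days=90):
    """Return (start, days) for the initial baseline period.

    earliest_by_source maps a log source name to the timestamp of its
    oldest available entry. The window is limited by the source with the
    shortest history and capped at max_days.
    """
    latest_start = max(earliest_by_source.values())
    days_available = (now - latest_start).days
    days = min(max_days, days_available)
    return now - timedelta(days=days), days

# Hypothetical dates: Windows logs go back 30 days, SecureFAIL logs 90 days.
now = datetime(2009, 8, 31)
earliest = {
    "windows_security": now - timedelta(days=30),
    "securefail": now - timedelta(days=90),
}
start, days = baseline_window(earliest, now)
print(start.date(), days)
```

With these assumed inputs the shorter history wins, so the baseline covers 30 days.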

3. Review log entries starting from the oldest to the newest, attempting to identify their types

a. Review all entries using the MS LogParser tool (can be obtained from http://www.microsoft.com/downloads/details.aspx?FamilyID=890cd06b-abf8-4c25-91b2-f8d975cf8c07&displaylang=en).

Run the tool as follows:

C:\Tools\LogParser.exe "SELECT EventID, SourceName, EventCategoryName, COUNT(*) AS Total INTO event_log_summary.txt FROM Security GROUP BY EventID, SourceName, EventCategoryName ORDER BY Total DESC" -i:EVT -resolveSIDs:ON

and review the resulting summary of event types.

b. Open the file “secureFAIL_log-082009.txt” in Notepad and review the entries. The LogParser tool above may also be used to analyze logs in plain text files (detailed instructions on using the tool fall outside the scope of this document).
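For plain-text logs, identifying message types by eye can be helped along by collapsing the variable parts of each line. The sketch below is one possible approach in Python, under stated assumptions: the SecureFAIL log format is not described here, so the sample lines are invented, and the helper names `message_type` and `summarize` are hypothetical.

```python
import re
from collections import Counter

def message_type(line):
    """Collapse variable parts of a log line so similar messages group together."""
    # Replace IPv4-looking addresses first, then any remaining digit runs.
    line = re.sub(r"\b\d{1,3}(?:\.\d{1,3}){3}\b", "<IP>", line)
    line = re.sub(r"\d+", "<N>", line)
    return line.strip()

def summarize(lines):
    """Count how many times each message type was seen, skipping blank lines."""
    return Counter(message_type(l) for l in lines if l.strip())

# Invented sample lines; real SecureFAIL entries will look different.
sample = [
    "Login failed for user 1001 from 10.0.0.5",
    "Login failed for user 1002 from 10.0.0.9",
    "Memory exhausted",
]
for msg, count in summarize(sample).most_common():
    print(count, msg)
```

To run it against a real file, the lines could be read with `open(path)` and passed to `summarize`; the substitution patterns would likely need tuning for the actual log format.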

4. Manually create a summary of all observed types; if realistic, collect the counts of times each message was seen (not likely in case of high log data volume)

This step is the same as when using the automated tools – the baseline is a table of all event types as shown below:

| Event ID | Event Description | Count | Average Count/day |
| --- | --- | --- | --- |
| 1517 | Registry failure | 212 | 2.3 |
| 562 | Login failed | 200 | 2.2 |
| 563 | Login succeeded | 24 | 0.3 |
| 550 | User credentials updated | 12 | 0.1 |
| 666 | Memory exhausted | 1 | 0.0 |

Assuming that no breaches of card data have been discovered in that time period, we can accept the above report as a baseline for “routine operation.” However, during the first review of the logs, it might be necessary to investigate some of the logged events before we accept them as normal (such as the last event in the table). The next step explains how this is done.
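As an aside on the mechanics: once event IDs have been extracted from the logs, the count and per-day average columns of such a table can be tallied with a few lines of Python. This is a minimal sketch with hypothetical data; the function name `build_baseline` and the event counts below are made up for illustration and are not taken from the table above.

```python
from collections import Counter

def build_baseline(event_ids, days):
    """Return (event_id, count, average_per_day) rows, most frequent first."""
    counts = Counter(event_ids)
    return [(eid, n, round(n / days, 1)) for eid, n in counts.most_common()]

# Hypothetical event IDs observed over an assumed 30-day baseline period.
events = [562] * 60 + [563] * 15 + [666]
for eid, n, avg in build_baseline(events, days=30):
    print(f"{eid:>6} {n:>6} {avg:>6}")
```

The event descriptions would still have to be filled in by hand or looked up from the log source.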

5. An additional step should be performed while creating a baseline: even though we assume that no compromise of card data has taken place, there is a chance that some of the log messages recorded over the 90 day period triggered some kind of action or remediation. Such messages are referred to as “known bad” and should be marked as such.

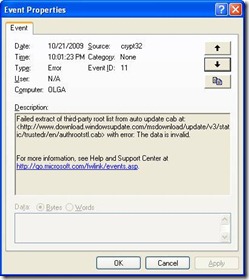

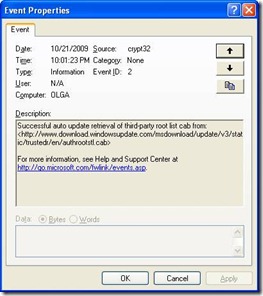

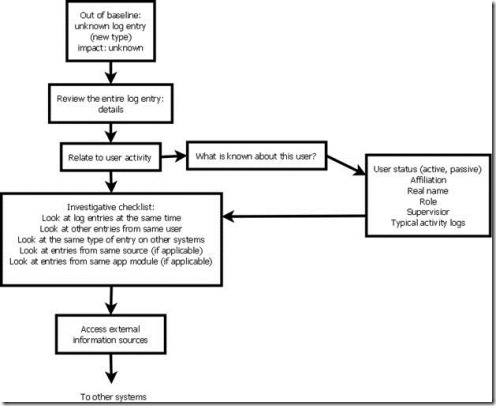

As when using automated log management tools, we notice the last line: the log record with event ID 666 and event name “Memory exhausted,” which occurred only once during the 90 day period. Such rarity is at least interesting; the message description (“Memory exhausted”) might also indicate a potentially serious issue, so the event needs to be investigated as described below in the investigative procedures.
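Picking out such rare entries from a longer baseline can be sketched as a simple filter. The Python below uses the counts from the table above; the function name `rare_events` and the threshold of one occurrence are assumptions made for illustration, and a real threshold would depend on log volume.

```python
def rare_events(baseline, max_count=1):
    """Return (event_id, description, count) for events seen at most max_count times."""
    return [
        (eid, desc, n)
        for eid, (desc, n) in baseline.items()
        if n <= max_count
    ]

# Event ID -> (description, count), taken from the baseline table above.
baseline = {
    1517: ("Registry failure", 212),
    562: ("Login failed", 200),
    563: ("Login succeeded", 24),
    550: ("User credentials updated", 12),
    666: ("Memory exhausted", 1),
}
print(rare_events(baseline))
```

Rarity alone does not make an event bad; it only marks the event as a candidate for investigation before it is accepted into the baseline.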

What are some of the messages that will be “known bad” for most applications?

The following are some rough guidelines for marking some messages as “known bad” during the process of creating the baseline. If generated, these messages will be looked at first during the daily review process. MANY site-specific messages might need to be added but this provides a useful starting point.

1. Login and other “access granted” log messages occurring at an unusual hour [1]

2. Credential and access modifications log messages occurring outside of a change window

3. Any log messages produced by expired user accounts

4. Reboot/restart messages outside of maintenance window (if defined)

5. Backup/export of data outside of backup windows (if defined)

6. Log data deletion

7. Logging termination on system or application

8. Any change to logging configuration on the system or application

9. Any log message that has triggered any action in the past: system configuration, investigation, etc.

10. Other logs clearly associated with security policy violations.

As we can see, this list is also very useful for creating a “what to monitor in near-real-time?” policy, and not just for log review. Over time, this list should be expanded based on knowledge of local application logs and past investigations.
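A few of the guidelines above can be sketched as simple rules in Python. Everything here is an assumption for illustration: the event structure (a dict with `time` and `category`), the category names, the 08:00 to 18:00 business hours and the function name `is_known_bad` are hypothetical, and real rules would be keyed to the actual messages of the local systems and applications.

```python
from datetime import datetime, time

BUSINESS_HOURS = (time(8, 0), time(18, 0))  # assumed local business hours
# Assumed category names for log deletion, logging termination, config change.
ALWAYS_BAD = {"log_deleted", "logging_stopped", "logging_config_changed"}

def is_known_bad(event, change_windows=()):
    """event: dict with 'time' (datetime, local time zone) and 'category' (str).

    change_windows: iterable of (start, end) datetime pairs.
    """
    when, category = event["time"], event["category"]
    if category in ALWAYS_BAD:
        return True
    if category == "access_granted":
        start, end = BUSINESS_HOURS
        return not (start <= when.time() < end)
    if category == "credential_change":
        return not any(s <= when < e for s, e in change_windows)
    return False

# Hypothetical change window: 22:00 to midnight on one evening.
window = (datetime(2009, 8, 15, 22, 0), datetime(2009, 8, 16, 0, 0))
night_login = {"time": datetime(2009, 8, 14, 3, 12), "category": "access_granted"}
planned_change = {"time": datetime(2009, 8, 15, 22, 30), "category": "credential_change"}
print(is_known_bad(night_login), is_known_bad(planned_change, [window]))
```

Events flagged this way are the ones to look at first during the daily review.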

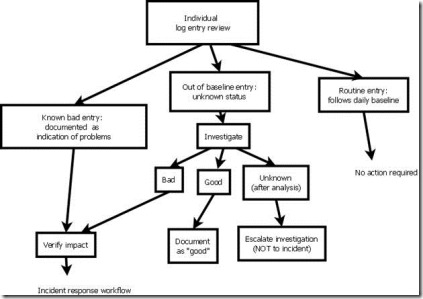

After we have built the initial baselines, we can start the daily log review.

[1] Technically, this also requires creating a baseline for better accuracy. However, logins occurring outside of business hours (for the correct time zone!) are typically at least “interesting” to review.

To be continued.

Follow PCI_Log_Review to see all posts.
