The long-awaited PCI Council guidance on virtualization has been released [PDF]. Congrats to the Virtualization SIG for the mammoth effort! I rather liked the document, but let the virtualization crowd (and press!) analyze it ad infinitum – I’d concentrate elsewhere: on the cloud! This guidance does not focus on cloud computing, but contains more than a few mentions, all of them pretty generic.

Here are some of the highlights and my thoughts on them.

Section 2.2.6 “Cloud Computing” does contain some potentially usable (if obvious) scope guidance:

“Entities planning to use cloud computing for their PCI DSS environments should first ensure that they thoroughly understand the details of the services being offered, and perform a detailed assessment of the unique risks associated with each service. Additionally, as with any managed service, it is crucial that the hosted entity and provider clearly define and document the responsibilities assigned to each party for maintaining PCI DSS requirements and any other controls that could impact the security of cardholder data.“ [emphasis by A.C.]

Now, after spending the last few months working on a training class on PCI DSS in the cloud for Cloud Security Alliance (in fact, I am still finishing the exercises for our July 8 beta run), the above sounds like a total no-brainer. However, I know A LOT of merchants “plan” to make the mistake of “we use PCI-OK cloud provider –> then we are compliant”, which is obviously completely insane (just as PA-DSS payment app does not make you PCI DSS compliant…and never did).

Further, the council guidance clarifies the above with:

”The cloud provider should clearly identify which PCI DSS requirements, system components, and services are covered by the cloud provider’s PCI DSS compliance program. Any aspects of the service not covered by the cloud provider should be identified, and it should be clearly documented in the service agreement that these aspects, system components, and PCI DSS requirements are the responsibility of the hosted entity [aka “merchant” – A.C.] to manage and assess. The cloud provider

should provide sufficient evidence and assurance that all processes and components under their control are PCI DSS compliant.” [emphasis in bold by A.C.]

The above is actually a gem, a nicely condensed version of a pile of challenges and hard problems, all nicely summarized. Indeed, “PCI in the cloud” is largely about the above paragraph, but … there is A LOT OF DEVIL in the details ![]() I’d like to draw your attention to the fact that providers have to “provide sufficient evidence and assurance” as opposed to just saying “we got PCI Level 1.” There is a big lesson for cloud providers in it…

I’d like to draw your attention to the fact that providers have to “provide sufficient evidence and assurance” as opposed to just saying “we got PCI Level 1.” There is a big lesson for cloud providers in it…

In further sections (section 4.3, mostly), there is some additional useful guidance, such as:

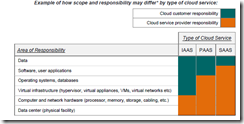

“In a public cloud, some part of the underlying systems and infrastructure is always controlled by the cloud service provider. The specific components remaining under the control

of the cloud provider will vary according to the type of service—for example, Infrastructure as a Service (IaaS), Platform as a Service (PaaS) and Software as a Service (SaaS). […] Physical separation between tenants is not practical in a public cloud environment because, by its very nature, all resources are shared by everyone.” [emphasis by A.C. again; this reminds us that PCI does NOT in fact require such ‘physical’ separation of assets]

On top of this, the Council folks also highlight some of the additional cloud security challenges, affecting PCI DSS, such as (page 24, section 4.3):

- “The hosted entity has limited or no visibility into the underlying infrastructure and related security controls.

- “The hosted entity has no knowledge of ―who‖ they are sharing resources with, or the potential risks their hosted neighbors may be introducing to the host system, data stores, or other resources shared across a multi-tenant environment.” [the section in bold is kind of a hidden big deal! think about it – your payment environment may blow up since your cloud neighbor just annoyed LulzSec by something they said on Twitter…]

The guidance counters these and other challenges with additional controls:

“In a public cloud environment, additional controls must be implemented to compensate for the inherent risks and lack of visibility into the public cloud architecture. A public cloud environment could, for example, host hostile out-of-scope workloads on the same virtualization infrastructure as a cardholder data environment. More stringent preventive, detective, and corrective controls are required to offset the additional risk that a public cloud, or similar environment, could introduce to an entity’s CDE.” [notice MUST, not “may” or “should”; also notice REQUIRED and not “suggested” or “oh wow, would it be nice if…”

]

And if you don’t have such additional controls, then: “These challenges may make it impossible for some cloud-based services to operate in a PCI DSS compliant manner.”

In any case, it was definitely a fun and useful read; hopefully future detailed guidance on PCI in the cloud is coming (given that virtualization SIG took a few years, I am looking forward to 2017 or later here)

BTW, my PCI DSS in the cloud training class will happen on July 8 in Bay Area and you can still sign up.